Buzzwords are fancy terms that we love to use. Everyone has heard buzzwords throughout their career, right? Well, it’s no different in DevOps. In fact, the field is full of jargon that is essential to understanding it.

I want to clarify the meaning behind some of them. I hope that DevOps concepts and practices will become more relatable as you read this article.

The most important ones

1. Let’s begin with “DevOps” itself:

In short, it covers the activities in software that:

- Reduce delivery time

- Automate non-productive or repetitive tasks

- Connect business units or departments to improve communication

- Offer a stable and scalable infrastructure

- Save costs associated with development and operations

Its ultimate goal is to achieve greater satisfaction from users and the business.

I wrote an entire article about DevOps which you can read here [LINK].

2. Pipeline:

A pipeline is an automated sequence of steps that code changes go through. It spans from development to deployment. Pipelines ensure consistency, quality, and speed in delivering software.

Imagine you have a software product and you want to roll out a new version every two weeks. You need repeatable builds and automated system tests for better quality control. You also need to version and save each output package for later use (manual tests and deployments).

Implementing good pipelines will result in:

- Faster process

- Fewer manual errors

- Better quality control

- Faster feedback
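To make the idea concrete, here is a minimal Python sketch of a pipeline as an ordered list of steps that stops at the first failure. The step names and the `run_pipeline` helper are made up for illustration; real pipelines are usually defined in a CI/CD tool rather than in application code.

```python
from typing import Callable, List, Tuple

# A step is a name plus a function that returns True on success.
Step = Tuple[str, Callable[[], bool]]


def run_pipeline(steps: List[Step]) -> List[str]:
    """Run the steps in order and stop at the first one that fails."""
    log = []
    for name, action in steps:
        ok = action()
        log.append(f"{name}: {'ok' if ok else 'failed'}")
        if not ok:
            break  # later steps never run on a broken build
    return log


# Hypothetical steps; each lambda stands in for real build or test work.
steps = [
    ("build", lambda: True),
    ("test", lambda: True),
    ("package", lambda: True),
]

for line in run_pipeline(steps):
    print(line)
```

Because every run executes the same steps in the same order, the result no longer depends on who triggered it or on which machine.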

3. Continuous Integration (CI):

CI is a practice that facilitates the process of code integration.

It automates the building and testing of code changes when they get pushed to a repository.

Imagine that you have a team where each developer is working on a specific feature or bug fix. You want to ensure that changes are easy to integrate and they don’t introduce new issues. This is where CI comes into play:

- You create a Git repository with a shared main codebase and individual branches for new features.

- You create a CI process for devs to try and test new features. You also enable integrating their changes back to the main codebase.

- The CI process detects every code change and runs immediately.

- It compiles and analyzes code, then runs tests and even reports their results.

- When feature branches are merged back into the main codebase, additional criteria apply. This can mean more tests, manual approvals or even automatic merging.
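The merge criteria from the last step can be sketched as a simple gate. This is only an illustration: the `CheckResults` structure and the `can_merge` function are hypothetical names, and in practice your Git hosting platform or CI tool enforces such rules.

```python
from dataclasses import dataclass


@dataclass
class CheckResults:
    """Outcome of one CI run for a feature branch (hypothetical structure)."""
    build_ok: bool
    tests_failed: int
    approvals: int


def can_merge(results: CheckResults, required_approvals: int = 1) -> bool:
    """Allow a merge only if the build passed, no test failed,
    and enough reviewers approved."""
    return (
        results.build_ok
        and results.tests_failed == 0
        and results.approvals >= required_approvals
    )


print(can_merge(CheckResults(build_ok=True, tests_failed=0, approvals=1)))
print(can_merge(CheckResults(build_ok=True, tests_failed=2, approvals=1)))
```

Setting `required_approvals=0` would correspond to the automatic merging mentioned above.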

4. Continuous Deployment (CD):

CD takes the previous (CI) concept one step further. It deploys code changes to various environments as soon as they pass automated tests. Environments can include the developer test, user acceptance test and production.

CD is applied when developers commit code changes. When all tests pass, the application is immediately deployed to the stage-specific servers. All without manual intervention. This reduces the time it takes to deliver new features and bug fixes to end-users.

This concept enables frequent releases while ensuring the safety of the production environment.

We also have subcategories of CD:

- Rollback CD means that we keep every released version as a stored state, so it’s easy to roll back.

- Canary CD means that we deploy two versions at the same time and distribute the requests between them. Let’s say 80% of the traffic goes to the existing endpoints, 20% to the new ones.
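As a rough sketch of the canary idea, the Python snippet below assigns each user to the existing or the new version with an 80/20 split. The `pick_version` function and the user IDs are assumptions for illustration; in real systems the split is usually configured in a load balancer or service mesh, not in application code.

```python
import hashlib


def pick_version(user_id: str, canary_percent: int = 20) -> str:
    """Map a user to a bucket from 0 to 99 and send the lowest
    `canary_percent` buckets to the new version."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100
    return "new" if bucket < canary_percent else "stable"


# The same user always lands on the same version,
# so nobody flips between versions on every request.
users = [f"user-{i}" for i in range(1000)]
new_share = sum(pick_version(u) == "new" for u in users) / len(users)
print(f"share of users on the new version: {new_share:.0%}")
```

Hashing the user ID instead of picking randomly per request is a design choice: it keeps each user's experience consistent while the canary is running.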

5. Infrastructure as Code (IaC):

IaC is the practice of managing infrastructure using code and automation tools.

There is a better approach than setting up servers and configuring them one by one, by hand. Coded infrastructure is versioned, tested, and easy to replicate and scale. This ensures consistency and reliability.

IaC also has two subcategories:

- Declarative IaC specifies the desired end state of the infrastructure. All without prescribing specific steps.

Tools used: Terraform, Azure Resource Manager (ARM), AWS CloudFormation, Puppet, Salt, etc.

- Procedural IaC (also known as “Imperative IaC”) outlines the exact steps to reach that state.

Tools: Chef; Azure CLI (AWS CLI or any other CLI) with pipelines.

Declarative IaC is more favored because it’s more abstract and less prone to errors.

Note that both Ansible and Salt have some procedural support.
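The difference is easier to see in a small sketch. In the declarative style you only describe the desired state, and a tool works out the steps. The Python function below imitates that planning step; the `plan` function and the resource names are hypothetical and not the API of any tool listed above.

```python
def plan(current: dict, desired: dict) -> list:
    """Compare the current and desired state and return the actions
    needed to get from one to the other."""
    actions = []
    for name in sorted(desired):
        if name not in current:
            actions.append(("create", name))
        elif current[name] != desired[name]:
            actions.append(("update", name))
    for name in sorted(current):
        if name not in desired:
            actions.append(("delete", name))
    return actions


# Hypothetical infrastructure: what exists today vs. what we declare.
current = {"web-server": {"size": "small"}, "old-db": {"size": "medium"}}
desired = {"web-server": {"size": "large"}, "cache": {"size": "small"}}

for action, resource in plan(current, desired):
    print(action, resource)
```

In the procedural style you would write those create, update and delete steps yourself, in the right order, for every change.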

6. Scalability:

In business, you need to expand your services to meet demand without compromises. In DevOps, scalability is a digital system’s capability to adapt and expand as needed, for example by providing a stable platform or scaling up servers as soon as possible. We want an exceptional and seamless user experience while maintaining performance, even under heavy loads.

Imagine an online store that expects 5x more traffic during the holiday season. The team needs to ensure quick response times so all orders can be recorded. There are two easy solutions:

1. Install more servers for this time period, which can be entirely automated.

2. Use metrics to dynamically scale your servers without any manual action. This solution is best when peak periods are unpredictable. Also, be careful and always set a limit on scaling with this option to avoid unexpected costs.
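The second option, including the cost limit, can be sketched in a few lines of Python. The function name, the 60% CPU target and the server limits are assumptions chosen for illustration; cloud providers and orchestrators ship their own autoscaling rules.

```python
import math


def desired_servers(current: int, cpu_percent: float,
                    target_percent: float = 60.0,
                    min_servers: int = 2, max_servers: int = 10) -> int:
    """Scale the server count in proportion to the measured CPU load,
    then clamp it between a minimum and a maximum."""
    wanted = math.ceil(current * cpu_percent / target_percent)
    return max(min_servers, min(max_servers, wanted))


print(desired_servers(current=4, cpu_percent=90))   # load above target: scale up
print(desired_servers(current=4, cpu_percent=300))  # extreme load: capped at max_servers
print(desired_servers(current=4, cpu_percent=10))   # quiet period: never below min_servers
```

The `max_servers` cap is what protects you from unexpected costs when a traffic spike (or a bug) drives the metric up.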

7. Microservices:

Microservices is an architectural approach. Complex applications are broken down into small, independent services that communicate over APIs.

Monoliths handle everything in one huge application. In contrast, microservices divide the software into separate independent parts. A microservice should be independent in development, testing and deployment. Changes only affect one part of the software, making it easier to maintain, scale and develop.

To achieve this, each service focuses on a specific business capability. Services communicate with each other using lightweight protocols, which enables technological diversity. One example is HTTP, which is supported in practically every programming language and framework.

There is also asynchronous communication between services, which is used for decoupled and fault-tolerant scenarios. A popular implementation, RabbitMQ, leverages event-driven communication and can store messages in queues until they are consumed.
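Here is a minimal sketch of the queue idea. It uses Python's in-process `queue.Queue` as a stand-in for a real message broker, so it only illustrates the pattern; the `publish` and `drain` helpers and the event names are made up for this example.

```python
import queue


def publish(q: queue.Queue, event: str, payload: dict) -> None:
    """Producer side: put a message on the queue and move on."""
    q.put({"event": event, "payload": payload})


def drain(q: queue.Queue, handler) -> int:
    """Consumer side: handle every waiting message, return how many."""
    handled = 0
    while True:
        try:
            message = q.get_nowait()
        except queue.Empty:
            return handled
        handler(message)
        q.task_done()
        handled += 1


orders = queue.Queue()

# The order service publishes events even though no consumer is running yet.
publish(orders, "order_created", {"order_id": 1})
publish(orders, "order_created", {"order_id": 2})

# Later, the billing service comes online and processes the backlog.
count = drain(orders, lambda m: print("billing handles", m["payload"]))
print("messages handled:", count)
```

The producer never waits for the consumer, which is what makes the two services decoupled: if the consumer is down for a while, the messages simply wait in the queue.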

8. Containerization:

Containerization is a lightweight form of virtualization. It packages applications and their dependencies together for consistent deployment.

Docker is the most popular containerization tool, which you might have heard about. Containers are consistent and able to run in different environments. All without worrying about the differences in the underlying infrastructure.

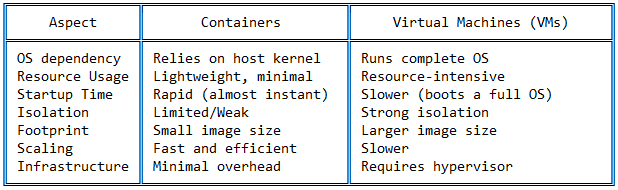

We often contrast VMs with Containers since they are similar but not the same. I think it’s also easy to explain containers this way:

9. Orchestration:

Orchestration refers to the automated coordination and management of complex tasks, processes, and workflows. It handles various components of an application or infrastructure. It helps streamline and optimize the deployment and management of software, especially in distributed and containerized environments.

Some of the tasks orchestration automates are deployment, scaling, resource allocation and uptime management. The most common orchestration platform is Kubernetes (including all platforms built on it, like OpenShift, EKS, AKS…).

Imagine you have several containers running various parts of your application. Orchestration distributes these containers across servers. You set up metrics and rules to enable scaling up or down based on demand. You use health probes to maintain availability, and volumes to keep stateful data if necessary.
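To give a feel for what distributing containers across servers involves, here is a deliberately simplified Python sketch. It places each container on the server with the most free CPU. The `schedule` function, the container names and the CPU numbers are all hypothetical, and real orchestrators weigh many more factors.

```python
def schedule(containers: dict, nodes: dict) -> dict:
    """Place each container (name -> CPU needed) on the node
    (name -> free CPU) that currently has the most capacity left."""
    free = dict(nodes)  # work on a copy so the input is not modified
    placement = {}
    # Place the biggest containers first; ties are broken by name.
    for name, cpu in sorted(containers.items(), key=lambda kv: (-kv[1], kv[0])):
        node = max(free, key=lambda n: free[n])
        if free[node] < cpu:
            raise RuntimeError(f"no node has room for {name}")
        free[node] -= cpu
        placement[name] = node
    return placement


containers = {"web": 2, "api": 2, "db": 3}
nodes = {"node-a": 4, "node-b": 4}

for container, node in schedule(containers, nodes).items():
    print(container, "->", node)
```

An orchestrator repeats this kind of decision continuously, for example when a server fails or when scaling rules add more containers.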

In Kubernetes, you can use .yaml files to interact with your containers, network, volume and other resources. It’s a common practice to make a reusable template of these files and store them in version control (Git). This way you make your Kubernetes application easy to replicate. You can learn more on this later in a new article!

10. GitOps:

GitOps is a modern approach to managing and automating the deployment and configuration of infrastructure and apps. It revolves around Git repositories as the “source of truth” for defining the desired state of systems.

Imagine Git at the center of your DevOps operations. Just as microservices break down monolithic applications, GitOps breaks down traditional operations. In GitOps, everything is defined in version-controlled repositories. It is very convenient for everyone, since Git is the most used tool when it comes to version control. I highly recommend that every IT engineer implement this concept.

Main benefits of GitOps:

- Version control as backbone: Git ensures that all changes to the system are tracked and are compliant with software development procedures.

- Automation: You can set up CI/CD to continuously monitor for changes in the desired state. This way you can immediately apply the latest changes on any environment as soon as the code is uploaded to version control.

- Collaboration: Since the system’s desired state is stored in Git, it enables collaboration from multiple members and teams. This helps transparency and shared responsibility for the system.

- Security: Policies are easier to enforce due to version-control. Only authorized members are able to read or modify the ‘source of truth’.
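The automation benefit boils down to a loop that compares what is in Git with what is running. Below is a minimal Python sketch of one pass of such a loop. The `Environment` class, the `reconcile` function and the revision strings are assumptions for illustration; dedicated GitOps tools implement this far more thoroughly.

```python
from dataclasses import dataclass, field


@dataclass
class Environment:
    """A deployment target that remembers which Git revision it runs."""
    name: str
    applied_revision: str = ""
    config: dict = field(default_factory=dict)


def reconcile(env: Environment, repo_revision: str, repo_config: dict) -> bool:
    """Apply the state stored in Git if the environment is behind.
    Returns True when something was changed."""
    if env.applied_revision == repo_revision:
        return False  # already in sync, nothing to do
    env.config = dict(repo_config)
    env.applied_revision = repo_revision
    return True


staging = Environment("staging")

# Hypothetical revisions and config, as they would be read from the repository.
print(reconcile(staging, "abc123", {"replicas": 2}))  # first sync applies the config
print(reconcile(staging, "abc123", {"replicas": 2}))  # same revision, no change
print(reconcile(staging, "def456", {"replicas": 3}))  # new commit gets applied
```

Because the environment only ever changes through a new revision, every change has an author, a timestamp and a way back in the Git history.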

Closing the Loop: Your Questions Matter

As we conclude this topic, I want to emphasize that your questions and curiosity are invaluable! We’ve only scratched the surface of an ever-evolving field, and there’s always more to discover…